SPECTRE Microprocessor Security Flaw - Big Brother = You

Jan 11, 2018 - 12:59 PM GMT | By: Raul_I_Meijer

Happy belated new year. Belatedly. Thought I’d sit out a few days, since there wasn’t much news to be expected. And it did pan out that way, other than Trump bogarting the limelight; but then, that isn’t really news either. Anything he says or does whips the expansive anti-Donald echo chamber into a daily frenzy. And frankly, guys, it’s not just boring, but you’re also continuously providing him with free publicity. At least make him work for some of it.

Then, however, the big microprocessor (chip) security ‘flaw’ was exposed. And that’s sort of interesting, because it concerns the basic architecture of practically every microchip produced in the past 20 years, going back well before smartphones. Now, the first thing you have to realize is that we’re not actually talking about a flaw here, but about a feature. We often use that line half-jokingly, but in this case it’s very much true. As Bloomberg succinctly put it:

All modern microprocessors, including those that run smartphones, are built to essentially guess what functions they’re likely to be asked to run next. By queuing up possible executions in advance, they’re able to crunch data and run software much faster. The problem in this case is that this predictive loading of instructions allows access to data that’s normally cordoned off securely..

Spectre fools the processor into running speculative operations – ones it wouldn’t normally perform – and then uses information about how long the hardware takes to retrieve the data to infer the details of that information. Meltdown exposes data directly by undermining the way information in different applications is kept separate by what’s known as a kernel, the key software at the core of every computer.
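For readers curious about the timing trick the quote describes, here is a toy Python simulation of the idea. It is a minimal sketch under loud assumptions: the cache, the latencies (`HIT_NS`, `MISS_NS`) and the function names (`ToyCache`, `victim_speculative_read`, `attacker_recover`) are all hypothetical, and nothing here touches real hardware. What it shows is why a speculative read that gets rolled back can still leak: the result is discarded, but one cache line stays "warm", and timing a read of every candidate line reveals which one, and thereby the secret value that selected it.

```python
# Toy model of a cache-timing side channel, the trick Spectre relies on.
# Everything here is simulated: no real cache, CPU or memory is touched.
# HIT_NS and MISS_NS are made-up latencies chosen only for illustration.
HIT_NS = 40    # hypothetical cost of reading a line already in the cache
MISS_NS = 200  # hypothetical cost of reading a line from main memory


class ToyCache:
    """Hypothetical cache that only remembers which lines are 'warm'."""

    def __init__(self) -> None:
        self.lines = set()  # indices of currently cached lines

    def flush(self) -> None:
        # Attacker's first step: evict every probe line.
        self.lines.clear()

    def read(self, line: int) -> int:
        # Return the simulated access time, then leave the line cached.
        cost = HIT_NS if line in self.lines else MISS_NS
        self.lines.add(line)
        return cost


def victim_speculative_read(cache: ToyCache, secret_byte: int) -> None:
    # Models an instruction the CPU runs speculatively and later discards:
    # its result is thrown away, but the probe line it touched (chosen by
    # the secret value) stays in the cache.
    cache.read(secret_byte)


def attacker_recover(cache: ToyCache) -> int:
    # Time a read of each of the 256 possible probe lines; the single fast
    # (cached) one reveals the secret byte.
    timings = {line: cache.read(line) for line in range(256)}
    return min(timings, key=timings.get)


if __name__ == "__main__":
    cache = ToyCache()
    cache.flush()
    victim_speculative_read(cache, secret_byte=ord("K"))
    print(chr(attacker_recover(cache)))  # prints K
```

Real attacks measure actual nanoseconds on noisy hardware and need many repetitions; the sketch only isolates the logic, which is also why a software patch can hide the leak without changing the underlying architecture.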

As I said: feature, not flaw (or two really, Spectre and Meltdown). And that makes one wonder: fixing a flaw is one thing, but how do you fix a feature? Several reports claim that software patches would cut the performance of affected chips (that would be all of them) by 25-30% or so. Which is bad enough, but the problem is not -limited to- software. And patching up hardware/firmware issues with software can’t be easy, if it’s even viable.

That would make one suspect that even if a software patch can suppress this feature, as long as the architecture doesn’t change, it can still function as a backdoor. Apple may say there are no known exploits of it, but would they tell us if, for instance, intelligence services used it? Or other parties that cannot be labeled ‘hackers’?

All that ties in seemingly seamlessly with Apple shareholders expressing their worries about the effects of the products they’ve invested in. Though you might want to wonder whether their worries would be the same if Apple shares plummeted tomorrow.

Two Major Apple Shareholders Push for Study of iPhone Addiction in Children

..activist investor Jana Partners and the California State Teachers’ Retirement System urged Apple to create ways for parents to restrict children’s access to their mobile phones. They also want the company to study the effects of heavy usage on mental health.

There are a few things off with this. First, there’s the risk of these kids’ iPhones being hacked through the flaw, feature, backdoor mentioned above. That’s potentially a lot worse for them. Then, there’s the obvious fact that parents can simply take their children’s phones away; there’s no better way to restrict access. Why should that be Apple’s responsibility?

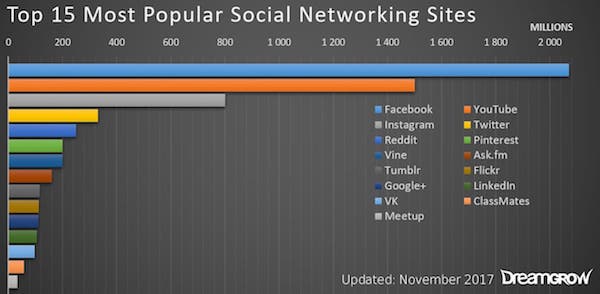

But most of all, children are addicted to their phones because of the content, and Apple, though they would wish it were different, are not the major content providers. That role is played by Alphabet/Google/YouTube and Facebook/Instagram, and to a lesser extent Snapchat and Twitter. And they are a much bigger threat than Apple is.

There has been a lot of talk about hate speech, fake news and election interference over the past year and change -and it won’t stop anytime soon, because it’s political gold dust. Germany, France, the UK, US and a whole slew of smaller nations have all tried to implicate Russia in all of these issues, and for good measure opposition parties to incumbent governments have been fingered too.

There are perhaps very obvious examples of all three topics, but the issue as a whole is far from clear. In Germany, Twitter accounts of the Alternative für Deutschland party have been blocked, but given that they now have seats in parliament, that is a tricky problem. Likewise, much of what the US MSM has been writing about Trump and his organization has proven unsubstantiated, and could therefore be labeled fake news. To date it hasn’t been, other than by the president himself, but who draws the line, and where?

The US election interference narrative is shaky, since it largely appears to rely on $100k or so in Facebook ads bought by some mysterious party, ads that are supposed to have been much more effective than many billions of dollars in campaign funding. The kind of thing that makes you think: if the Russians are so much better at this than we are, we might as well hand it all over to them right now.

The main problem with the election interference stories is that none of it has ever been proven. Not even the $100k+ in Facebook ads; they might just as well have originated in Langley and we only have Langley’s word for any alternative claims. Overall, defining what is hate speech and what is fake news seems to come down far too much to opinions rather than facts, and that has us sliding down a supremely slippery slope, not exactly a place to build solid policy on.

So how and why can Facebook and Google be trusted to provide objective assessments of what is fake news and hate speech vs what is not? That is what they are being tasked with at present. They hire tens of thousands of people to do that ‘job’. But what are these people’s qualifications? How do these companies make sure political bias is kept out of the process? Do they even want to keep it out, or do Zuckerberg, Brin and Schmidt want to confirm their own bias?

It’s hard to see how the decision-making process (fake vs real news, hate speech, political meddling) will not inevitably become one guided and goaded by intelligence services, because they are the ones who claim to have both the knowledge and the evidence upon which these decisions must be based. But US intelligence is not politically neutral, and they don’t share the sources of their ‘evidence’.

Still, none of that is the main problem here either. Though we’re getting closer.

Over the holidays, I saw a movie in which there was a teachers’ Christmas party at some high school. All the teachers were bored and sat or stood in silence looking at nothing. And I realized that kind of scene no longer exists today. Though the movie was just 10-15 years old, there have been some profound changes. At a party like that, or at a bus stop, in a bus or train, a waiting room or even a family dinner, everyone is now glued to their smartphone. Even people walking down the street are. And those driving down the street.

What all these people seem to do most is look at their Facebook/Instagram/Snapchat etc. accounts. And apart from the profound changes to human interaction in public spaces, there are other things that deserve attention. Like for instance that while you think you’re having private conversations with your friends and family, there’s nothing private about it. Everything you tell your ‘friends’ de facto becomes property of the owners of the app you’re sharing it on.

When your friends read what you just wrote, they see not only that but also ads that the app displays alongside it. That means Facebook makes money from your friends’ attention for your words. Since Facebook reached 2 billion active users in 2017, that adds up. And they don’t have to do anything for that, other than keep the channels open.

But that is not the worst part. Facebook not only makes money off your contact with family and friends, something most people would probably find comparatively innocent, it also ‘spies’ on you. At the very least, its algorithms actively scour its databases to suggest possible additional friends, and/or people you might know. That can lead to unexpected and potentially undesirable results:

Facebook Figured Out My Family Secrets, And It Won’t Tell Me How

Rebecca Porter and I were strangers, as far as I knew. Facebook, however, thought we might be connected. Her name popped up this winter on my list of “People You May Know”, the social network’s roster of potential new online friends for me. The People You May Know feature is notorious for its uncanny ability to recognise who you associate with in real life. It has mystified and disconcerted Facebook users by showing them an old boss, a one-night-stand, or someone they just ran into on the street.

These friend suggestions go far beyond mundane linking of schoolmates or colleagues. Over the years, I’d been told many weird stories about them, such as when a psychiatrist told me that her patients were being recommended to one another, indirectly outing their medical issues.

What makes the results so unsettling is the range of data sources – location information, activity on other apps, facial recognition on photographs – that Facebook has at its disposal to cross-check its users against one another [..] . People are generally aware that Facebook is keeping tabs on who they are and how they use the network, but the depth and persistence of that monitoring is hard to grasp. And People You May Know, or “PYMK” in the company’s internal shorthand, is a black box.

To try to get a look into that black box – and the unknown and apparently aggressive data collection that feeds it – I began downloading and saving the list of people Facebook recommended to me, to see who came up, and what patterns might emerge. On any given day, it tended to recommend about 160 people, some of them over and over again; over the course of the winter, it suggested more than 1400 different people to me. About 200, or 15% of them, were, in fact, people I knew, but the rest appeared to be strangers.

And then there was Rebecca Porter. She showed up on the list after about a month: An older woman, living in Ohio, with whom I had no Facebook friends in common. I did not recognise her, but her last name was familiar. My biological grandfather is a man I’ve never met, with the last name Porter, who abandoned my father when he was a baby. My father was adopted by a man whose last name was Hill, and he didn’t find out about his biological father until adulthood.

The gist of the tale is clear: Facebook introduced someone to a person (s)he had never known, and may not have wanted to know, or even know about.

But we’re still skirting the real problems. Though by now you may want to give it all another thought. The real problem is that by giving out the information on Facebook, even if it all seems completely harmless and innocent to you, you have become Big Brother.

That may sound over the top, and I don’t want to get into the popular innuendo that the NSA started either Facebook or Bitcoin, but it’s obvious that when Google’s and Facebook’s algorithms can dig up so much information on people and the links between them, the intelligence community wants a piece of that. Google/Alphabet’s executive chairman Eric Schmidt (he’s leaving that post soon) heads the DOD’s Defense Innovation Board for a reason, and he has been close to the Democratic Party core for years.

It all fits too well to be discarded. It’s inevitable that the NSA and the CIA have recognized the potential of Big Tech for spying on Americans -and everyone else- for a while now. What you write on Facebook may seem harmless, but the algorithms can do more with it than -quite literally- is ‘dreamt of in your philosophy’.

And so Pirate Bay co-founder Peter Sunde is accurate in recognizing the symptoms, but not in diagnosing the underlying affliction. Mark Zuckerberg is not the dictator, and Trump is not in control of the data. They are mere conduits, and the buck stops elsewhere. We’ve centralized all our data to Big Brother.

We’ve Centralized All Of Our Data To A Guy Called Mark Zuckerberg

“Everything has gone wrong. That’s the thing, it’s not about what will happen in the future it’s about what’s going on right now. We’ve centralized all of our data to a guy called Mark Zuckerberg, who’s basically the biggest dictator in the world as he wasn’t elected by anyone. Trump is basically in control over this data that Zuckerberg has, so I think we’re already there. Everything that could go wrong has gone wrong and I don’t think there’s a way for us to stop it.”

One of the most important things to realize is that the problem isn’t a technological one. “The internet was made to be decentralized,” says Sunde, “but we keep centralizing everything on top of the internet.” To support this, Sunde points out that in the last 10 years, almost every up-and-coming tech company or website has been bought by the big five: Amazon, Google, Apple, Microsoft and Facebook. The ones that manage to escape the reach of the giants, often end up adding to the centralization.

We don’t create things anymore, instead we just have virtual things. Uber, Alibaba and Airbnb, for example, do they have products? No. We went from this product-based model, to virtual product, to virtually no product whatsoever. This is the centralization process going on. Although we should be aware of the current effects of centralization, we shouldn’t overlook that it’s only going to get worse. There are a lot of upcoming tech-based services that are at risk of becoming centralized, which could have a huge impact on our daily lives.

[..] Feeling a bit optimistic, I asked Sunde whether we could still fight for decentralization and bring the power back to the people. His answer was simple. “No. We lost this fight a long time ago. The only way we can do any difference is by limiting the powers of these companies – by governments stepping in – but unfortunately the EU or the US don’t seem to have any interest in doing this.”

The model is absolutely perfect, and it’s not even one that was built on purpose. When Facebook started, Zuckerberg et al were not thinking about 2 billion active users. Nor were they aiming for algorithms that could so pervasively document people’s lives and their connections to others across space and time, or counting on these people themselves to provide the information that can be used to build files on them, files that can at some point in their lives be used against them, if that is deemed necessary.

And this is just early innings. This is before artificial intelligence and virtual -and/or augmented- reality have really taken off. But when AI is truly unleashed upon the internet, everyone’s seemingly innocent everyday stories as told to family and friends will be a treasure trove when it comes to building the pictures of their lives that are useful to governments and their intelligence agencies.

These platforms are labeled social media, and we might want to think about that label. It’s nice to be able to communicate with people who are not where you find yourself at a given point in time, but there’s a price to pay for that; actually, multiple prices. We’ve likely all found ourselves in situations by now where people act less sociable, not more, precisely because of social media; they’re just communicating with their phones, not their immediate surroundings.

Somehow at times that feels like a whole new -big- step for mankind: you’re together but you’re not. We are social animals, but we attempt to transfer our social lives across space and time to moments and places we’re not at. And we have a gadget that does that for us. That is a puzzling development, and from where I’m sitting a worrying one as well. It’s somewhere along the same lines as being able to watch ever better photography of ever more remote nature scenes while that nature is being destroyed.

Still, few of us would have imagined that when 1984 finally happened, we would ourselves turn out to be Big Brother, but that’s what we are. Or if you want you can insist we’re merely feeding the monster. Same difference. But maybe that too is just a small step for man and a giant leap for mankind. Just like the never before seen quality footage of animals about to go extinct.

Who is any one of us to judge any of it? It’s confusing, it upends everything we were taught is normal and lasting, and that’s only when we pay attention; and it all happens at lightning speed.

One thing we can say though: none of this is innocent. Whatever it is mankind is leaping into, we left innocence behind for good.

By Raul Ilargi Meijer

Website: http://theautomaticearth.com (provides unique analysis of economics, finance, politics and social dynamics in the context of Complexity Theory)

© 2018 Copyright Raul I Meijer - All Rights Reserved Disclaimer: The above is a matter of opinion provided for general information purposes only and is not intended as investment advice. Information and analysis above are derived from sources and utilising methods believed to be reliable, but we cannot accept responsibility for any losses you may incur as a result of this analysis. Individuals should consult with their personal financial advisors.


© 2005-2022 http://www.MarketOracle.co.uk - The Market Oracle is a FREE Daily Financial Markets Analysis & Forecasting online publication.